"Raytraced primitives in unity3d: sphere"

Posted on 2016-03-24 in shaders

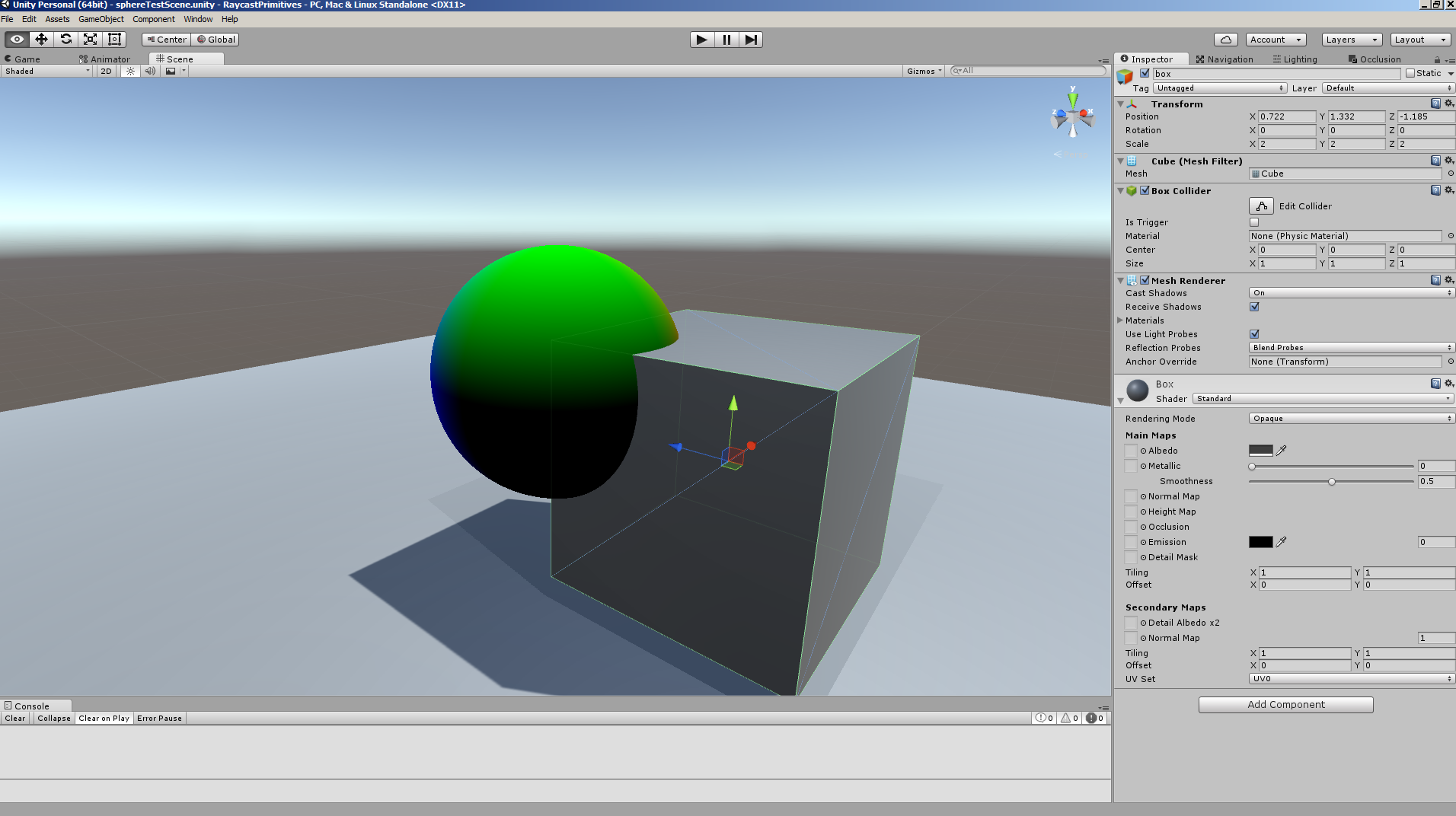

Alright, quick question: how many triangles are in this sphere?

The answer is 12.

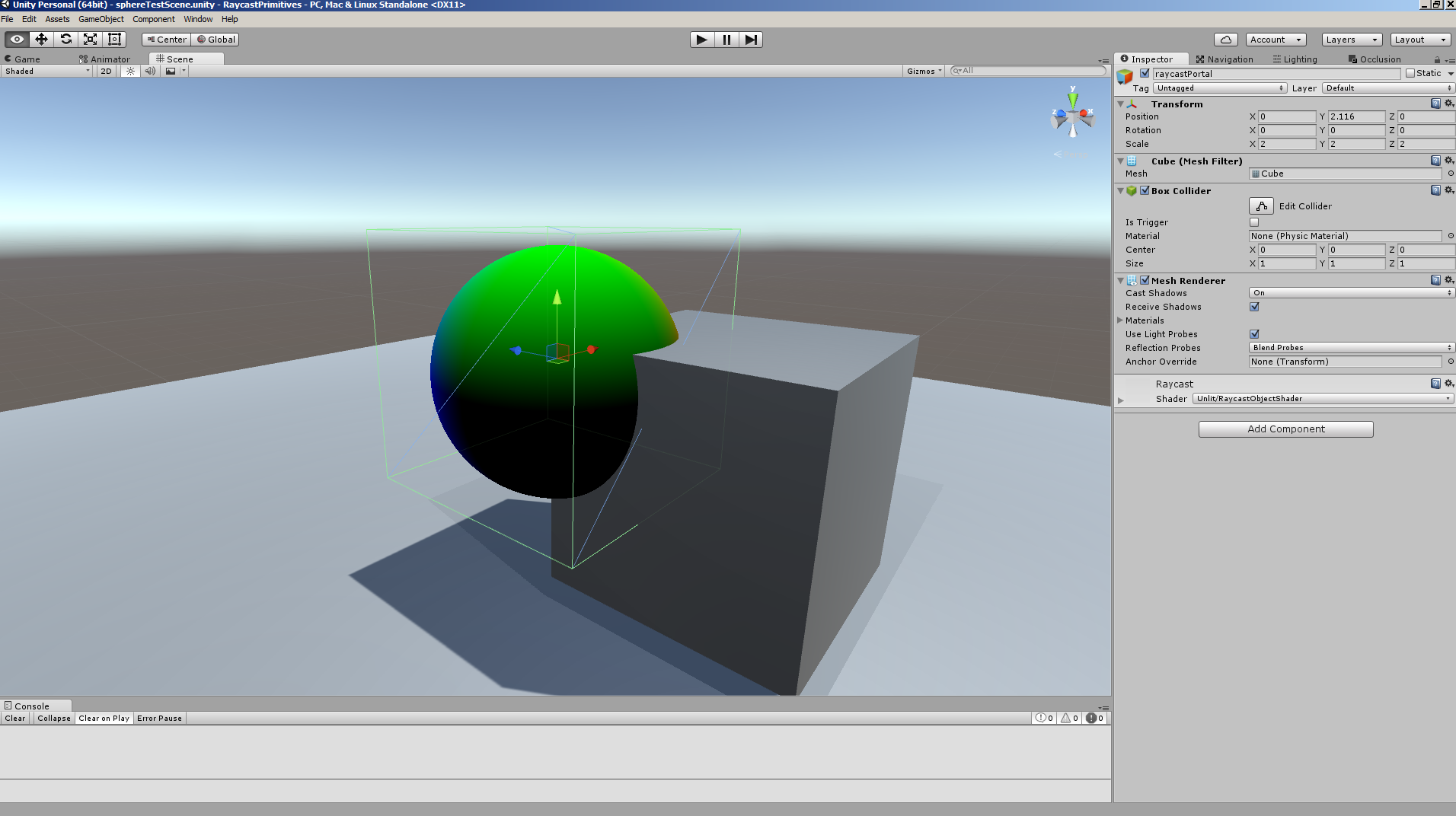

The sphere is a raytraced primitive drawn on what is actually a box (the box's boundaries are shown by the green wireframe). A box has 6 faces of 2 triangles each, which is where the 12 triangles come from.

Unity's shader system, despite its numerous flaws, makes it easy to include this kind of uncommon object.

I've always had some interest in raytracing and non-polygonal rendering, so I decided to write this post.

The shaders:

Raycast.cginc:

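```hlsl
// Returns the distance along the ray to the nearest intersection with the sphere,
// or -1.0 if the ray misses. rayDir must be normalized.
float getDistanceToSphere(float3 rayPos, float3 rayDir, float3 spherePos, float sphereRadius){
    float3 diff = spherePos - rayPos;
    float r2 = sphereRadius * sphereRadius;  // squared radius
    float tca = dot(diff, rayDir);           // signed distance to the point of closest approach
    float3 perp = diff - tca * rayDir;       // part of diff perpendicular to the ray
    float perpSqLen = dot(perp, perp);
    float perpSqDepth = r2 - perpSqLen;
    if (perpSqDepth < 0)
        return -1.0;                         // the ray misses the sphere
    float dt = sqrt(perpSqDepth);            // half the chord length
    return tca - dt;                         // negative if the hit lies behind rayPos
}

struct ContactInfo{
    float3 relPos;  // hit point relative to the sphere center
    float3 n;       // surface normal at the hit point
};

ContactInfo calculateSphereContact(float3 worldPos, float3 spherePos, float sphereRadius){
    ContactInfo contact;
    contact.relPos = worldPos - spherePos;
    contact.n = normalize(contact.relPos);
    return contact;
}

// Projects a world-space point and returns clip-space z/w for SV_Depth.
// This is already in [0, 1] on Direct3D-style platforms; on OpenGL, z/w
// spans [-1, 1] and may need remapping with z * 0.5 + 0.5.
float calculateFragmentDepth(float3 worldPos){
    float4 depthVec = mul(UNITY_MATRIX_VP, float4(worldPos, 1.0));
    return depthVec.z/depthVec.w;
}
```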

RaycastObjectShader.shader:

```shaderlab
Shader "Unlit/RaycastObjectShader"{
    Properties {
        _MainTex ("Texture", 2D) = "white" {}
    }
    SubShader {
        Tags { "RenderType"="Opaque" }
        LOD 100

        Pass{
            Tags {"LightMode" = "ForwardBase"}

            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag

            #include "UnityCG.cginc"
            #include "Raycast.cginc"

            struct appdata{
                float4 vertex : POSITION;
            };

            struct v2f{
                float4 vertex : SV_POSITION;
                float3 rayDir : TEXCOORD0;  // unnormalized camera-to-surface vector
                float3 rayPos : TEXCOORD1;  // world-space point on the portal surface
            };

            sampler2D _MainTex;
            float4 _MainTex_ST;

            v2f vert (appdata v){
                v2f o;
                // Newer Unity versions use UnityObjectToClipPos(v.vertex) and unity_ObjectToWorld.
                o.vertex = mul(UNITY_MATRIX_MVP, v.vertex);
                float3 worldPos = mul(_Object2World, v.vertex).xyz;
                o.rayPos = worldPos;
                // Left unnormalized on purpose: it interpolates correctly across the face.
                o.rayDir = worldPos - _WorldSpaceCameraPos;
                return o;
            }

            struct FragOut{
                float4 col : SV_Target;
                float depth : SV_Depth;
            };

            FragOut frag (v2f i){
                float3 rayDir = normalize(i.rayDir);
                float3 rayPos = i.rayPos;
                fixed4 col = 1.0;

                // Sphere parameters are hardcoded for now.
                float3 spherePos = float3(0.0, 2.0, 0.0);
                float sphereRadius = 1.0;

                float t = getDistanceToSphere(rayPos, rayDir, spherePos, sphereRadius);
                if (t < 0)
                    discard;  // no hit: whatever is behind the portal shows through

                float3 worldPos = rayPos + rayDir*t;
                ContactInfo sphereContact = calculateSphereContact(worldPos, spherePos, sphereRadius);
                col.xyz = sphereContact.n;  // visualize the normal as color

                FragOut result;
                result.col = col;
                result.depth = calculateFragmentDepth(worldPos);
                return result;
            }
            ENDCG
        }
    }
}
```

Now, the theory and some explanations:

I do not want to render the entire scene via raycasting; instead, I want to seamlessly integrate a raytraced object with the rest of the Unity scene.

Because of this, I'll create "portal" objects. A "portal" is any geometric primitive that has a raycasting shader on it.

The raycasting shader discards unused pixels, and for the remaining pixels it replaces the color (and depth) with whatever is rendered within the portal.

For that reason, you really don't want portal objects to act as occluders. Think of a portal as a window that lets you look into the "raytraced" world.

Currently, there's no lighting (that can be added), and raytraced objects do not cast shadows (I sort of expected to get this working fairly quickly, but it turns out that the shadow rendering shader interacts with the camera in mysterious ways, so I'll need to look into it later). Due to a silly typo, I spent quite a bit of time figuring out the correct way to write a new depth value from a shader. Because of that, the explanations are going to be a bit short.

Now, the major functions are in Raycast.cginc, simply because they'll need to be called from multiple places. If we want to add lighting, for example, we'll need more than one forward pass, and copy-pasting the entire block of collision-testing code from one pass into another is really not the right way to do it.

Some details.

The vertex shader is fairly simple. It calculates a vector from the camera to the point on the mesh and passes it, unnormalized, to the fragment shader.

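The "unnormalized" part is important. Any data passed from the vertex shader to the fragment shader is interpolated across the faces being drawn.

The reason you don't want to normalize direction vectors in the vertex shader is that normalized vectors do not interpolate properly. The vector "worldPos - cameraPos" is a linear function of position, so interpolating it across a face gives exactly the camera-to-pixel vector for every pixel. Once you normalize it per vertex, that linearity is lost: the interpolated direction ends up biased toward the vertex nearer the camera, and it isn't even unit length.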

If you grab a piece of paper and try interpolating "worldPos - cameraPos", vs "normalize(worldPos - cameraPos)", you'll quickly see the problem.
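To make the paper-and-pencil experiment concrete, here is a small CPU-side sketch in C++ (shaders are awkward to unit-test, so the math is ported instead). It assumes perspective-correct interpolation, the GPU default, under which an attribute at a surface point is blended with the same weight as the vertex positions. The `float3` struct and the helpers `interpolate`, `dirFromUnnormalized` and `dirFromNormalized` are illustrative stand-ins, not Unity functions.

```cpp
#include <cassert>
#include <cmath>

// Tiny stand-in for HLSL's float3 (CPU-side sketch only).
struct float3 { float x, y, z; };

float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator-(float3 a, float3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float3 operator*(float3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(float3 a, float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
float3 normalize(float3 v) { return v * (1.0f / std::sqrt(dot(v, v))); }

// Blends two per-vertex values with weight w in [0, 1]. With perspective-correct
// interpolation, w is the same weight that blends the two vertex positions
// into the surface point being shaded.
float3 interpolate(float3 a, float3 b, float w) { return a + (b - a) * w; }

// Pass worldPos - cameraPos unnormalized, normalize per pixel (the post's approach).
float3 dirFromUnnormalized(float3 cam, float3 v0, float3 v1, float w) {
    return normalize(interpolate(v0 - cam, v1 - cam, w));
}

// Normalize per vertex first, then interpolate and renormalize per pixel.
float3 dirFromNormalized(float3 cam, float3 v0, float3 v1, float w) {
    return normalize(interpolate(normalize(v0 - cam), normalize(v1 - cam), w));
}
```

With the camera at the origin and an edge from (-1, 0, 1) to (3, 0, 3), the midpoint is (1, 0, 2). The unnormalized version reproduces that direction exactly, while the normalized version points straight along +z, roughly 27 degrees off.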

The bulk of the work is done in the fragment shader. The shader is by no means optimal, but it does the job for now. I normalize the ray direction and perform the intersection check via the getDistanceToSphere() subroutine. That subroutine is hardwired to return -1.0 if there's no intersection (which isn't exactly optimal, because we use flow control twice and could simply discard the pixel at that point); otherwise it computes the t value of the intersection point. Because the ray direction is normalized, "t" is the distance from the ray origin on the portal surface to the intersection point.
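A CPU-side C++ port of getDistanceToSphere() can be handy for checking the intersection math outside Unity. This sketch squares the radius (r2 is meant to be the squared radius) and uses the signed projection dot(diff, rayDir) for t, so a sphere behind the ray origin yields a negative t. The `float3` struct and its operators are minimal stand-ins for the HLSL types, assumed for this example only.

```cpp
#include <cassert>
#include <cmath>

// Tiny stand-in for HLSL's float3 (CPU-side sketch only).
struct float3 { float x, y, z; };

float3 operator-(float3 a, float3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float3 operator*(float3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(float3 a, float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// rayDir must be unit length. Returns the distance to the near hit,
// or -1 when the ray misses the sphere.
float getDistanceToSphere(float3 rayPos, float3 rayDir, float3 spherePos, float sphereRadius) {
    float3 diff = spherePos - rayPos;         // ray origin -> sphere center
    float r2 = sphereRadius * sphereRadius;   // squared radius
    float tca = dot(diff, rayDir);            // signed distance to the closest approach
    float3 perp = diff - rayDir * tca;        // component of diff perpendicular to the ray
    float perpSqDepth = r2 - dot(perp, perp);
    if (perpSqDepth < 0.0f)
        return -1.0f;                         // the ray passes outside the sphere
    float dt = std::sqrt(perpSqDepth);        // half the chord length
    return tca - dt;                          // negative if the hit is behind rayPos
}
```

For a ray starting at (0, 2, -5) and pointing along +z toward the post's sphere at (0, 2, 0), this gives t = 4 for radius 1 and t = 3 for radius 2. The radius-2 case is where squaring matters: using the plain radius as r2 would give about 3.59 instead.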

That "t" value is then used to compute the world position of the intersection point (rayPos + rayDir*t), which in turn is used to calculate the depth (via the calculateFragmentDepth() function) and the sphere normal (via calculateSphereContact()).
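The steps from t to a normal and a depth value can be sketched on the CPU as well. This C++ version passes the view-projection matrix in explicitly; `simplePerspective` is a hypothetical camera at the origin looking down +z with a Direct3D-style depth range (0 at the near plane, 1 at the far plane). Unity's real UNITY_MATRIX_VP is platform-dependent (OpenGL uses a [-1, 1] clip depth, and newer Unity versions reverse the depth range on some platforms), so the exact numbers will differ; the point is that depth comes from the raytraced hit, not from the box surface.

```cpp
#include <cassert>
#include <cmath>

// Tiny stand-ins for HLSL's float3 / float4 / float4x4 (CPU-side sketch only).
struct float3 { float x, y, z; };
struct float4 { float x, y, z, w; };
struct float4x4 { float m[4][4]; };  // m[row][column]

float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator-(float3 a, float3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float3 operator*(float3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(float3 a, float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
float3 normalize(float3 v) { return v * (1.0f / std::sqrt(dot(v, v))); }

// Matrix times column vector, like HLSL's mul(M, v).
float4 mul(const float4x4& M, float4 v) {
    float r[4];
    for (int i = 0; i < 4; ++i)
        r[i] = M.m[i][0] * v.x + M.m[i][1] * v.y + M.m[i][2] * v.z + M.m[i][3] * v.w;
    return {r[0], r[1], r[2], r[3]};
}

struct ContactInfo { float3 relPos; float3 n; };

// Mirrors calculateSphereContact(): offset from the center and unit normal.
ContactInfo calculateSphereContact(float3 worldPos, float3 spherePos) {
    ContactInfo contact;
    contact.relPos = worldPos - spherePos;
    contact.n = normalize(contact.relPos);
    return contact;
}

// Mirrors calculateFragmentDepth(), with the matrix passed in instead of
// read from UNITY_MATRIX_VP.
float calculateFragmentDepth(const float4x4& viewProj, float3 worldPos) {
    float4 clip = mul(viewProj, float4{worldPos.x, worldPos.y, worldPos.z, 1.0f});
    return clip.z / clip.w;
}

// Hypothetical view-projection: camera at the origin looking down +z,
// depth 0 at nearZ and 1 at farZ.
float4x4 simplePerspective(float nearZ, float farZ) {
    float a = farZ / (farZ - nearZ);
    return {{{1, 0, 0, 0}, {0, 1, 0, 0}, {0, 0, a, -nearZ * a}, {0, 0, 1, 0}}};
}
```

For example, a ray starting at (0, 0, 1) along +z hits a unit sphere centered at (0, 0, 5) at t = 3, giving the hit point (0, 0, 4) and the normal (0, 0, -1). With near and far planes at 1 and 101, that hit gets a depth of about 0.76, smaller than the depth of a point further along the same ray.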

For the moment, I simply use the normal value as the sphere color.

I'll try to return to the subject of raytraced objects at a later date, and hopefully write better examples.

For today, however, this is all.