"Semi-automatic shape transfer between object topologies using 'equivalence maps'"

Posted on 2016-03-23 in programming

Consider this scenario: you're making a game and using a character creation system such as MakeHuman, Mixamo Fuse, or something similar.

Now imagine that you've created a nice character in one system but need to transfer it to another, for example from MakeHuman to Mixamo Fuse. Perhaps you need the other system's topology, or the other system is integrated into your game while this one isn't.

If there's just one character, you can do retopology: drag individual vertices of the target topology to match the shape of the original object. You might be able to speed up the process with something like Blender's Shrinkwrap modifier, but it will still take some time.

If more than one character needs transferring, you're in trouble, because moving each one to the target topology by hand takes quite a while.

Long story short, I figured out a general approach for automating this kind of process. The catch is that someone still needs to do one topology transfer by hand.

You can transfer shape from a source object to a target object in a semi-automatic process if:

- both objects are textured;
- every face of each object uses unique texture coordinates (no overlapping, mirrored, or tiled textures);
- you have created what I call an "equivalence map".
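The unique-coordinates requirement can be checked before baking. Below is a minimal, hypothetical sketch in plain Python (not the Blender API): it rasterizes each UV triangle onto a `size` × `size` grid and reports texels whose centre is covered by more than one face. The function name `overlapping_texels` and the list-of-UV-triangles input are assumptions for illustration; mirrored islands stacked in the same UV space show up as overlapping texels.

```python
from typing import List, Sequence, Tuple

UV = Tuple[float, float]


def _edge(a: UV, b: UV, p: UV) -> float:
    # Edge function: positive if p is to the left of the directed edge a -> b.
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def _covers(tri: Sequence[UV], p: UV) -> bool:
    e0 = _edge(tri[0], tri[1], p)
    e1 = _edge(tri[1], tri[2], p)
    e2 = _edge(tri[2], tri[0], p)
    # Strictly inside for either winding; points exactly on an edge are ignored,
    # so two triangles that merely share an edge are not counted as overlapping.
    return (e0 > 0 and e1 > 0 and e2 > 0) or (e0 < 0 and e1 < 0 and e2 < 0)


def overlapping_texels(uv_tris: List[Sequence[UV]], size: int) -> List[Tuple[int, int]]:
    """Return texels (x, y) whose centre lies inside more than one UV triangle."""
    coverage = [[0] * size for _ in range(size)]
    for tri in uv_tris:
        for y in range(size):
            for x in range(size):
                centre = ((x + 0.5) / size, (y + 0.5) / size)
                if _covers(tri, centre):
                    coverage[y][x] += 1
    return [(x, y) for y in range(size) for x in range(size) if coverage[y][x] > 1]


# A unit quad split along its diagonal: the two halves touch but do not overlap.
quad = [((0.0, 0.0), (1.0, 0.0), (1.0, 1.0)), ((0.0, 0.0), (1.0, 1.0), (0.0, 1.0))]
```

Here `overlapping_texels(quad, 8)` is empty, while adding a second copy of the lower triangle (as a mirrored island would) flags every texel it covers. Testing every texel against every triangle is slow but simple; restricting the loops to each triangle's UV bounding box is the obvious speedup.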

An equivalence map is a 2D texture in which each texel stores the corresponding texture coordinates of the other object. Naturally, you'll want it stored in a floating-point format, since 8-bit channels would quantize each coordinate to 256 steps. You can then look up a texel in the equivalence map and find out which texture coordinates it corresponds to in the original topology.
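To make the data structure concrete, here's a small plain-Python sketch (not the author's code). It assumes a texture is a list of rows of float tuples, `texture[y][x]`, with row 0 at v = 0, and does a nearest-texel lookup with wrapping, the same sampling idea as the `wrap`/`sampleImage` helpers in the Blender script further down. `make_mirror_map` is a hypothetical equivalence map in which the source UV layout is simply the target layout mirrored in u.

```python
import math
from typing import List, Tuple

Texel = Tuple[float, ...]
Texture = List[List[Texel]]  # texture[y][x], row 0 at v = 0


def wrap(value: float) -> float:
    """Map a texture coordinate into [0, 1), repeating like a tiled texture."""
    return value - math.floor(value)


def lookup(texture: Texture, u: float, v: float) -> Texel:
    """Nearest-texel lookup; no filtering, so stored coordinates are never blended."""
    height, width = len(texture), len(texture[0])
    # Clamp in case rounding pushes a wrapped coordinate up to exactly 1.0.
    x = min(int(wrap(u) * width), width - 1)
    y = min(int(wrap(v) * height), height - 1)
    return texture[y][x]


def make_mirror_map(size: int) -> Texture:
    """Hypothetical equivalence map: each texel stores the source UV of its centre,
    where the source layout is the target layout mirrored in u."""
    return [[(1.0 - (x + 0.5) / size, (y + 0.5) / size) for x in range(size)]
            for y in range(size)]


equiv = make_mirror_map(4)
source_uv = lookup(equiv, 0.1, 0.6)  # target UV in, source UV out
```

Looking up target UV (0.1, 0.6) lands in texel (0, 2), which stores the source UV (0.875, 0.625).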

Once you have an equivalence map, you can transfer the shape from the source to the target using this process:

- Render the source mesh's world-space positions into a texture (use a floating-point texture format). This requires a shader that renders the mesh into its own texture space, writing the position as the output color. That one is easy:

```
pixelColor.rgb = vertexPosition.xyz;
```

- For the target mesh, loop through its vertices. For each vertex, look up the corresponding coordinate in the equivalence map, then read the position from the world position texture at the coordinates you got. That is:

```
vertexPosition.xyz = textureLookup(worldPositionTexture, textureLookup(equivalenceMap, vertexTexCoords.xy).xy).xyz;
```
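The two lookups above can be sketched on the CPU in plain Python. This is a hypothetical stand-alone version, not the author's Blender script: textures are lists of rows of float tuples (`texture[y][x]`), the world position texture stores (x, y, z, alpha) with alpha 0 marking texels nothing was baked into, and `transfer_shape` returns new positions keyed by vertex index instead of editing a mesh.

```python
import math
from typing import Dict, List, Tuple

Texel = Tuple[float, ...]
Texture = List[List[Texel]]  # texture[y][x], row 0 at v = 0


def lookup(texture: Texture, u: float, v: float) -> Texel:
    """Nearest-texel lookup with wrapping (no filtering)."""
    height, width = len(texture), len(texture[0])
    x = min(int((u - math.floor(u)) * width), width - 1)
    y = min(int((v - math.floor(v)) * height), height - 1)
    return texture[y][x]


def transfer_shape(
    target_uvs: Dict[int, Tuple[float, float]],
    equivalence_map: Texture,
    world_position_tex: Texture,
) -> Dict[int, Tuple[float, float, float]]:
    """Map each target vertex to a position sampled from the source bake.

    target_uvs: vertex index -> UV in the target's layout.
    equivalence_map: texels hold the matching UV in the source's layout.
    world_position_tex: texels hold (x, y, z, alpha) baked from the source.
    """
    new_positions = {}
    for vertex, (u, v) in target_uvs.items():
        # Step 1: target UV -> source UV.
        src_u, src_v = lookup(equivalence_map, u, v)[:2]
        # Step 2: source UV -> baked world position.
        x, y, z, alpha = lookup(world_position_tex, src_u, src_v)
        if alpha == 0.0:
            continue  # nothing baked here; leave this vertex where it is
        new_positions[vertex] = (x, y, z)
    return new_positions


# Tiny hypothetical example: 2x2 textures, equivalence map mirrored in u.
world = [
    [(0.0, 0.0, 0.0, 1.0), (1.0, 0.0, 0.0, 1.0)],
    [(0.0, 1.0, 0.0, 1.0), (0.0, 0.0, 0.0, 0.0)],  # last texel left empty
]
equiv = [[(1.0 - (x + 0.5) / 2, (y + 0.5) / 2) for x in range(2)] for y in range(2)]
uvs = {0: (0.25, 0.25), 1: (0.75, 0.25), 2: (0.75, 0.75), 3: (0.25, 0.75)}
result = transfer_shape(uvs, equiv, world)
```

Sampling is nearest-texel, as in the Blender script below. Bilinear filtering could blend stored coordinates across UV seams, where neighbouring texels may belong to unrelated parts of the model.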

I actually tested this in Blender.

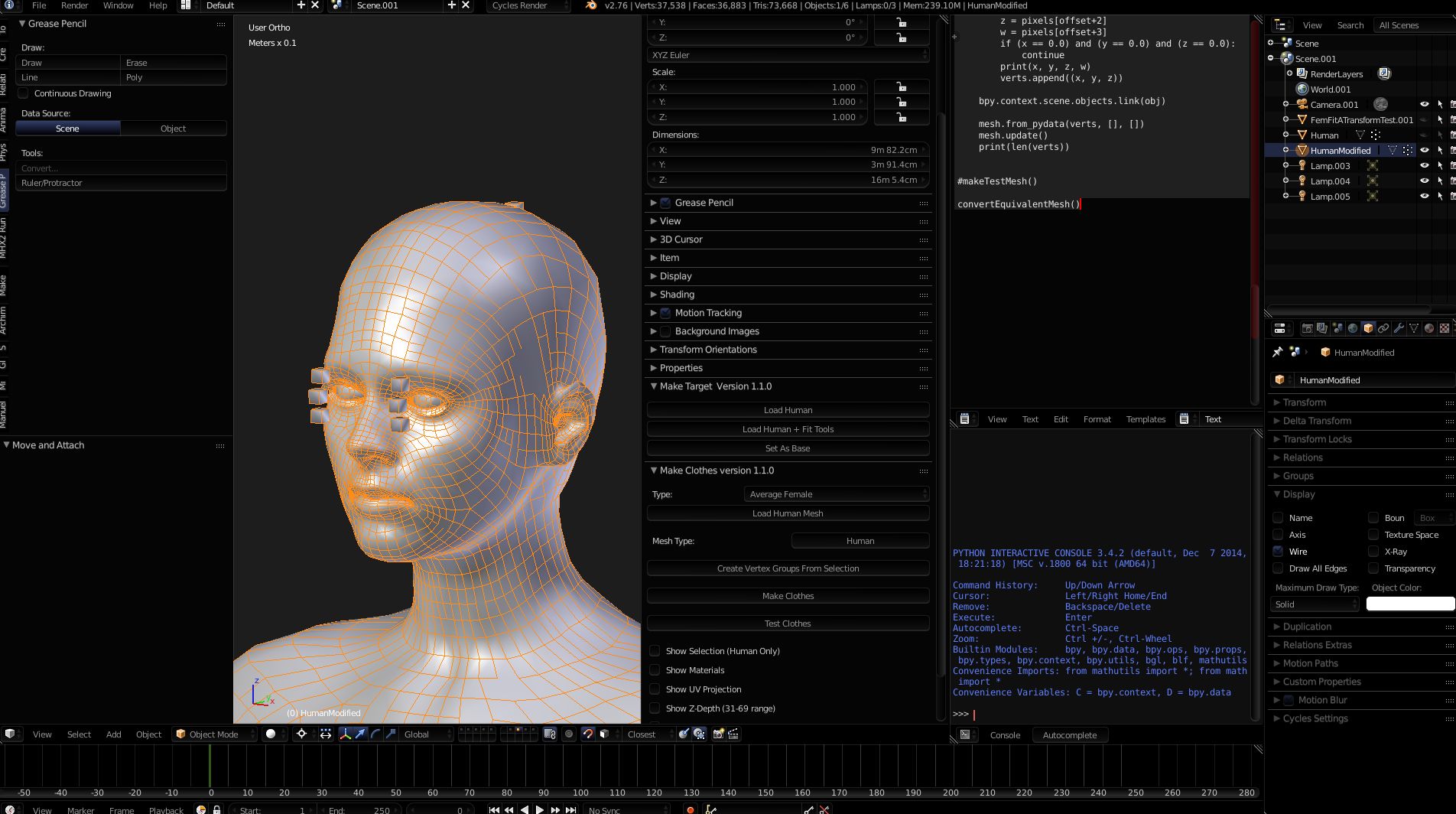

Old model:

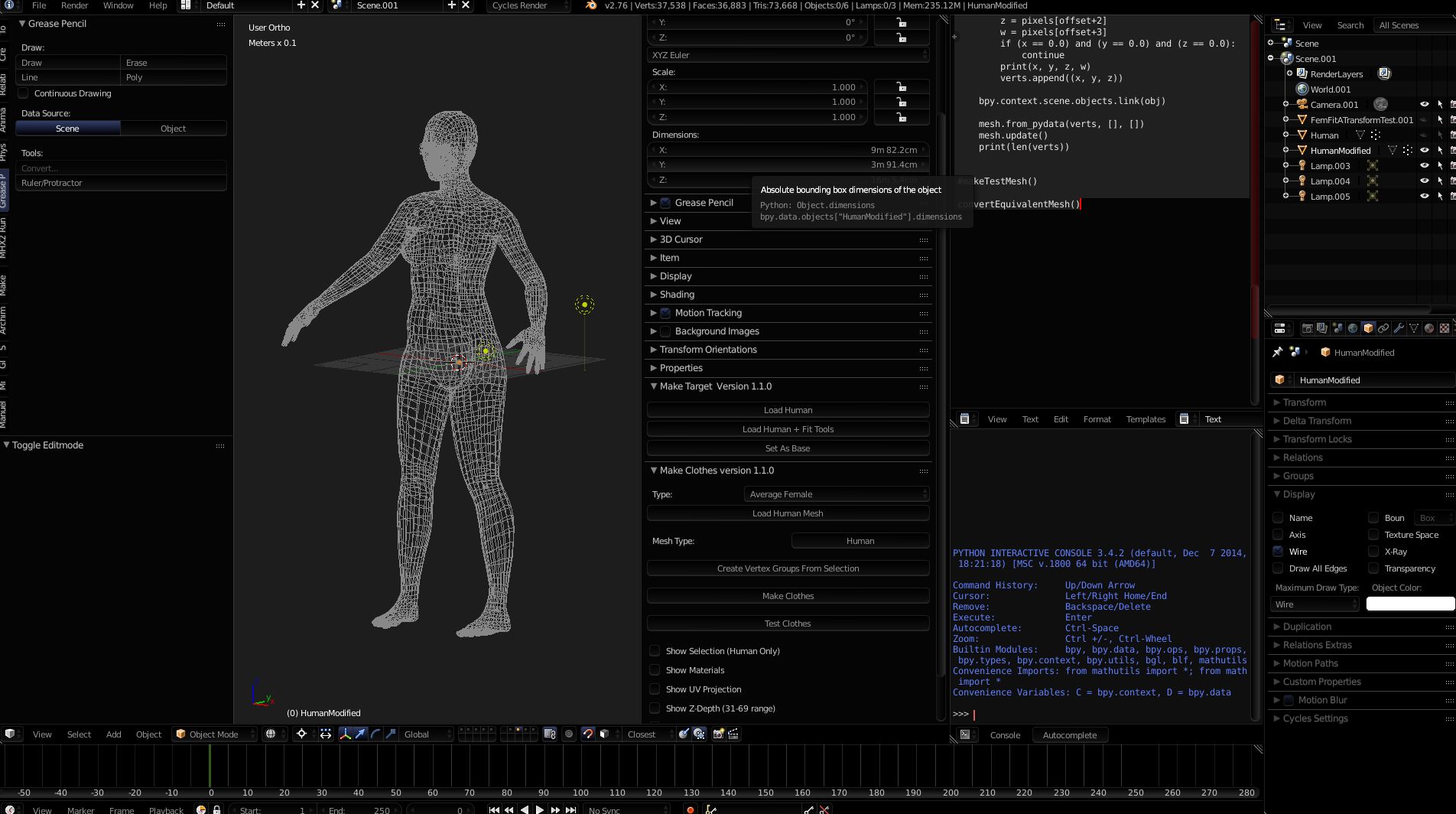

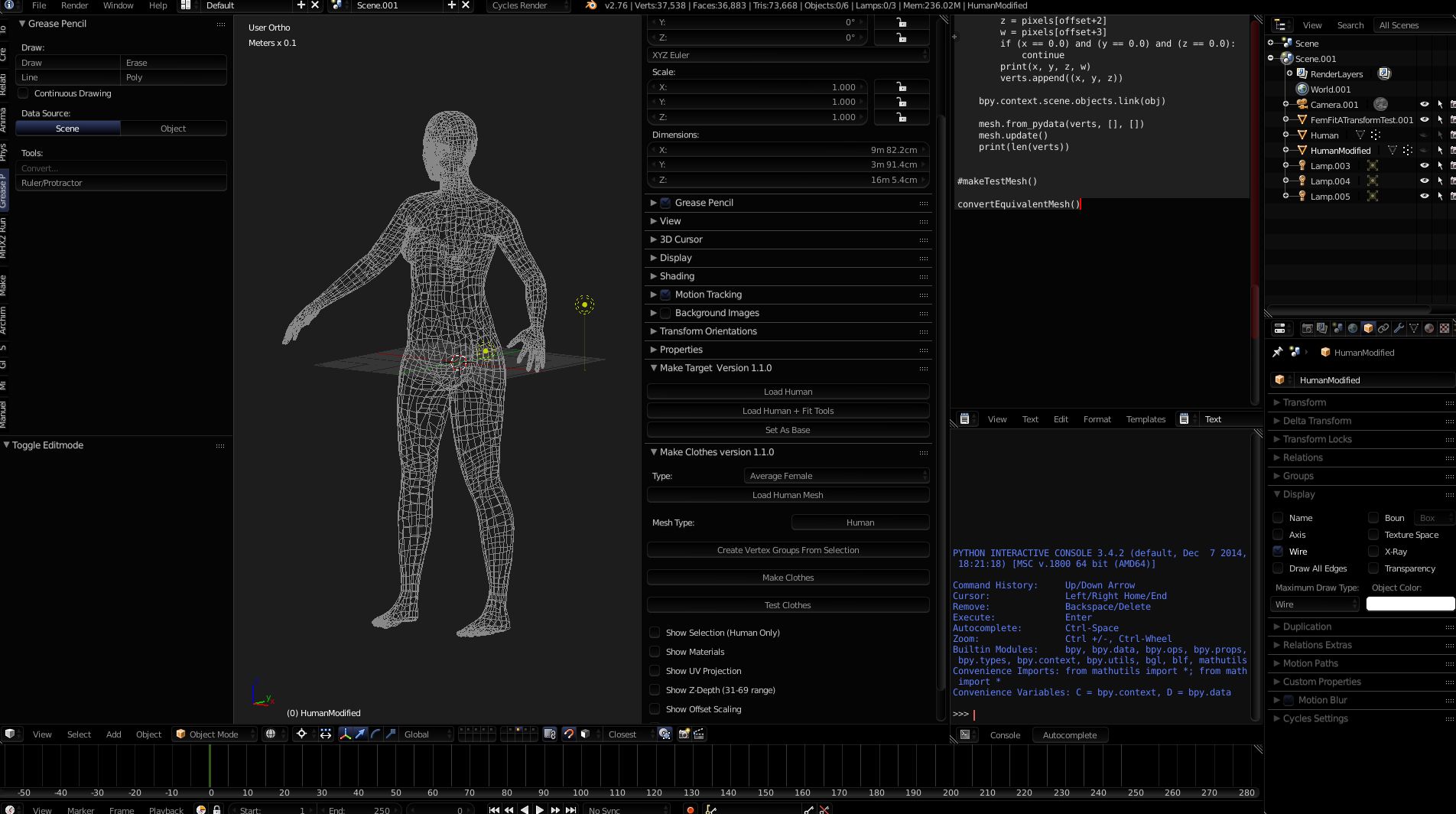

New model:

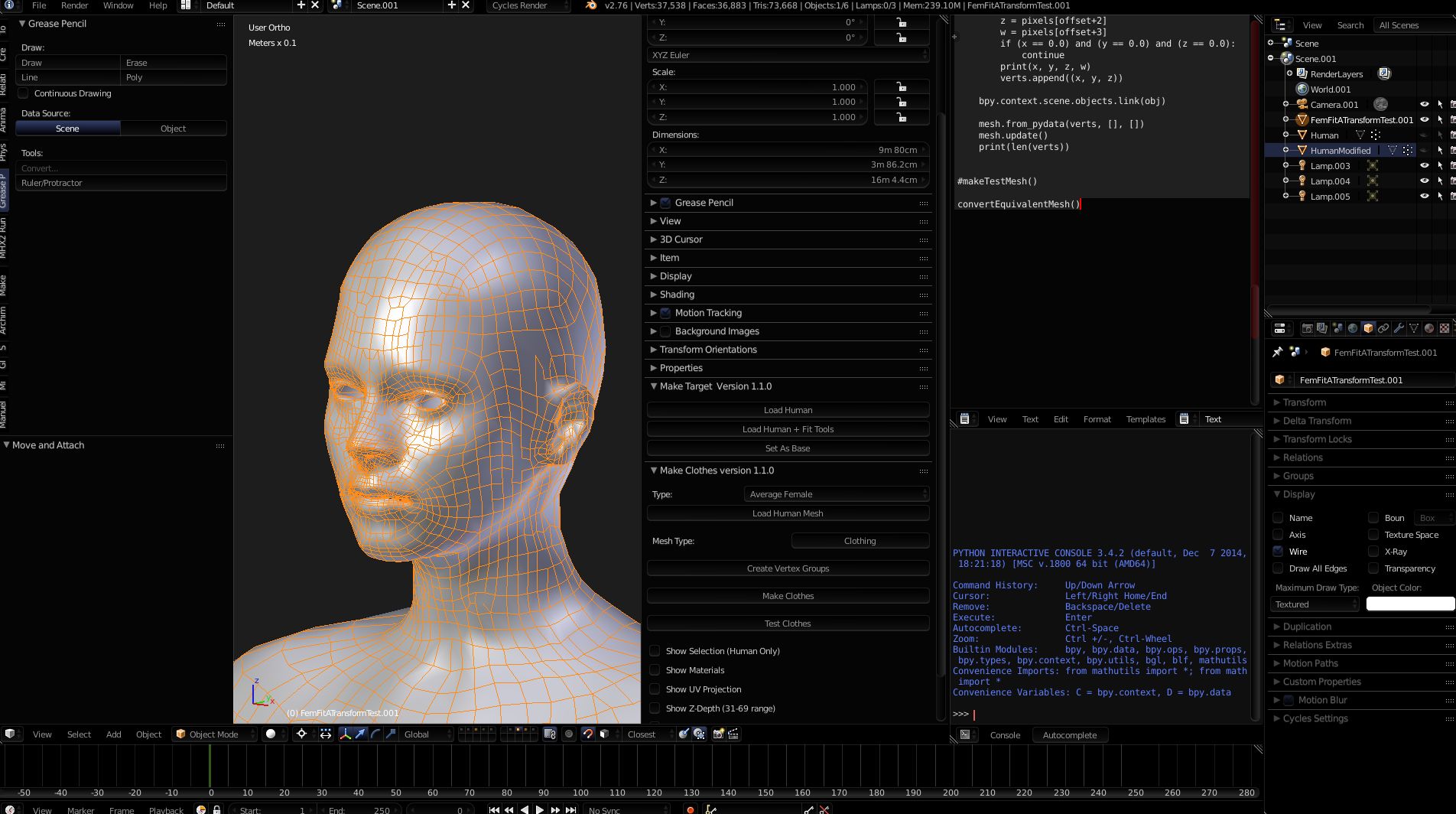

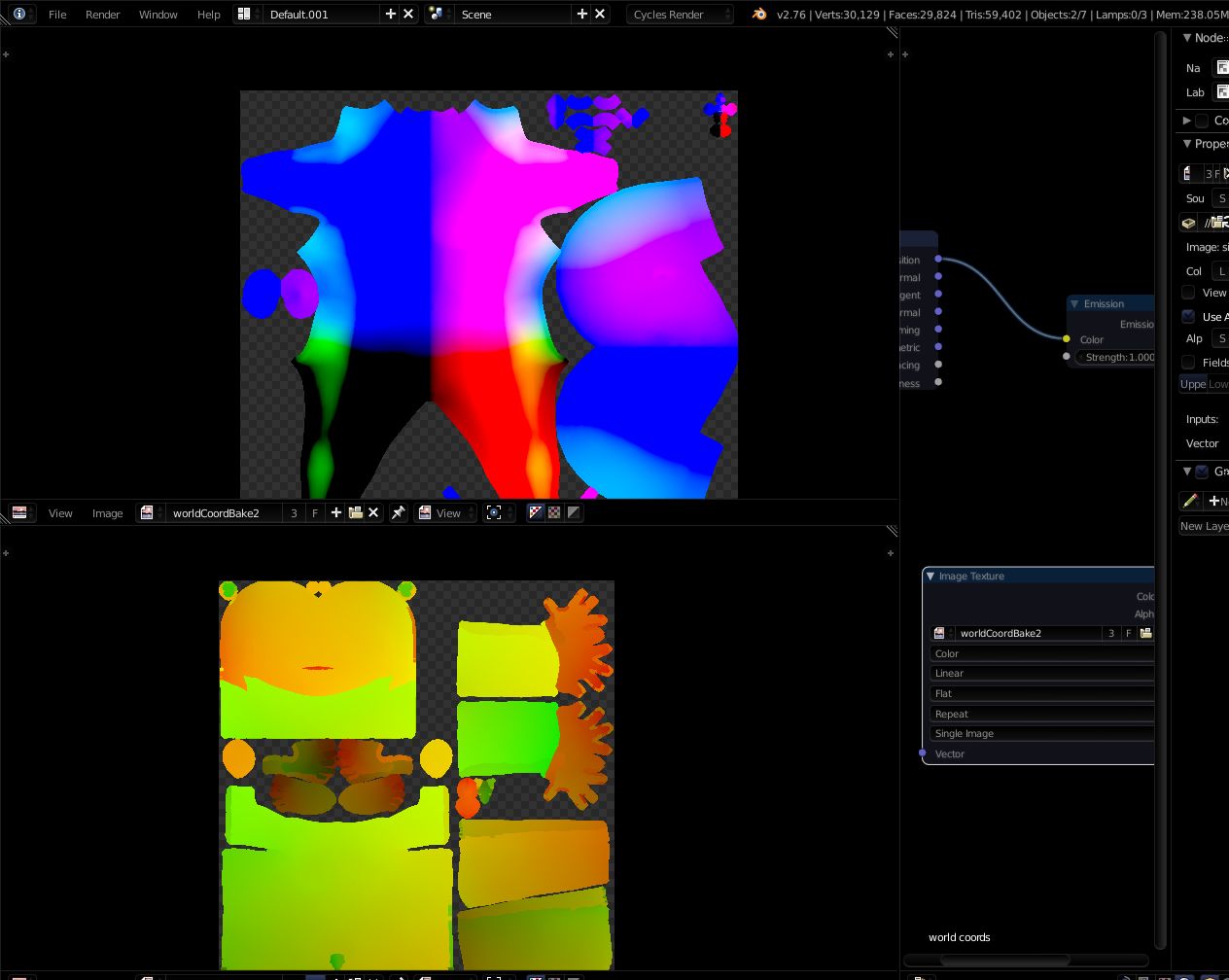

Here's what equivalence maps look like (world position texture above, equivalence map below):

Python code I used:

```python
import bpy
import math


def getOffset(img, x, y):
    # Index of the first channel of texel (x, y) in the flat pixel array.
    return img.channels * (x + y * img.size[0])


def wrap(val):
    # Keep only the fractional part, mapped into [0, 1), like a repeating texture.
    result = math.modf(val)[0]
    if result < 0:
        return result + 1.0
    return result


def sampleImageOffset(img, u, v):
    # Nearest-texel lookup; clamp so a coordinate that rounds up to 1.0 stays in range.
    x = min(int(wrap(u) * img.size[0]), img.size[0] - 1)
    y = min(int(wrap(v) * img.size[1]), img.size[1] - 1)
    return getOffset(img, x, y)


def sampleImage(img, u, v, pixels=None):
    # Callers pass a cached list(img.pixels), which is much faster than
    # indexing img.pixels repeatedly. Assumes an RGBA image.
    if pixels is None:
        pixels = img.pixels
    offset = sampleImageOffset(img, u, v)
    return (pixels[offset], pixels[offset + 1], pixels[offset + 2], pixels[offset + 3])


def convertEquivalentMesh():
    # World-space positions baked from the source (MakeHuman) mesh.
    worldCoordTex = bpy.data.images['worldCoordBake2a']
    # For each texel of the target (Fuse) UV layout: the matching source UV.
    fuseToMkhEquiv = bpy.data.images['FuseToMakeHumanEquivalence']
    fuseToMkhUvs = list(fuseToMkhEquiv.pixels)
    worldCoordPixels = list(worldCoordTex.pixels)

    for obj in bpy.context.selected_objects:
        print(obj)
        if obj.type != "MESH":
            continue
        print("mesh found")
        mesh = obj.data
        uvData = mesh.uv_layers.active.data
        # Walk face corners (loops) because UVs are stored per corner, not per vertex.
        # A vertex on a UV seam is visited once per corner; the last visit wins.
        for face in mesh.polygons:
            for vert, loop in zip(face.vertices, face.loop_indices):
                n = mesh.vertices[vert].normal
                p = mesh.vertices[vert].co
                uv = uvData[loop].uv
                print("{0} {1} {2}".format(n, p, uv))
                print("{0} {1}".format(uv.x, uv.y))

                # Target UV -> source UV via the equivalence map.
                origUv = sampleImage(fuseToMkhEquiv, uv.x, uv.y, fuseToMkhUvs)
                print("Orig UV: {0} {1}".format(origUv[0], origUv[1]))

                # Source UV -> baked world position.
                worldCoord = sampleImage(worldCoordTex, origUv[0], origUv[1], worldCoordPixels)
                #worldCoord = sampleImage(worldCoordTex, uv.x, uv.y, worldCoordPixels)

                # Alpha 0 means nothing was baked into this texel; leave the vertex alone.
                if worldCoord[3] == 0.0:
                    continue

                print("worldCoord: {0} {1} {2}".format(worldCoord[0], worldCoord[1], worldCoord[2]))
                # co is in object space, so this assumes the target object has an identity transform.
                mesh.vertices[vert].co.x = worldCoord[0]
                mesh.vertices[vert].co.y = worldCoord[1]
                mesh.vertices[vert].co.z = worldCoord[2]
                #obj.data.vertices[vert].co.y = 0.0


def makeTestMesh():
    # Debug helper: turn every non-empty texel of the position bake into a loose vertex.
    worldCoordTex = bpy.data.images['worldCoordBake']
    pixels = list(worldCoordTex.pixels)
    w = worldCoordTex.size[0]
    h = worldCoordTex.size[1]
    channels = worldCoordTex.channels
    numPixels = w * h
    print(w, h, channels, numPixels)

    mesh = bpy.data.meshes.new("TestMesh")
    obj = bpy.data.objects.new("TestMeshObj", mesh)
    obj.show_name = True

    verts = []
    for i in range(numPixels):
        offset = i * channels
        x = pixels[offset + 0]
        y = pixels[offset + 1]
        z = pixels[offset + 2]
        a = pixels[offset + 3]
        if x == 0.0 and y == 0.0 and z == 0.0:
            continue
        print(x, y, z, a)
        verts.append((x, y, z))

    # Blender 2.7x API; in 2.8+ use bpy.context.collection.objects.link(obj).
    bpy.context.scene.objects.link(obj)
    mesh.from_pydata(verts, [], [])
    mesh.update()
    print(len(verts))


#makeTestMesh()
convertEquivalentMesh()
```

The textures in this example were created with the Cycles renderer in Blender. To bake the original equivalence map, I created a shader that output texture coordinates to either the color or the emission channel, and then baked it onto the other model. A similar process was used for the "world coordinate" texture: just the position input connected to either the emission or the color output, followed by a bake.
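Cycles does the baking for you, but to show what a position bake computes, here's a hypothetical CPU sketch in plain Python (not what the author ran). It walks the texels of a `size` × `size` texture, finds which UV triangle covers each texel centre, and writes the barycentrically interpolated per-corner value plus an alpha of 1. With world positions as the per-corner values, this produces the kind of texture the "world coordinate" bake gives. As an assumption, an equivalence map could come out of the same routine if the target mesh carried the matching source UVs as per-corner values; the author instead baked from one model onto the other in Cycles.

```python
from typing import List, Optional, Sequence, Tuple

UV = Tuple[float, float]
Texture = List[List[Tuple[float, ...]]]  # texture[y][x], row 0 at v = 0


def barycentric(p: UV, a: UV, b: UV, c: UV) -> Optional[Tuple[float, float, float]]:
    """Barycentric weights of p in UV triangle abc, or None if p is outside it."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if det == 0.0:
        return None  # degenerate (zero-area) UV triangle
    w0 = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    w1 = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    w2 = 1.0 - w0 - w1
    if w0 < 0.0 or w1 < 0.0 or w2 < 0.0:
        return None
    return (w0, w1, w2)


def bake(uv_tris: Sequence[Sequence[UV]],
         corner_values: Sequence[Sequence[Sequence[float]]],
         size: int) -> Texture:
    """Rasterize triangles in UV space, storing the interpolated value plus alpha 1.

    uv_tris[i] holds the three UVs of triangle i; corner_values[i] holds the value
    to bake (e.g. a world position) at the same three corners. Texels that no
    triangle covers stay all zeros, so their alpha is 0.
    """
    dims = len(corner_values[0][0])
    tex = [[(0.0,) * (dims + 1) for _ in range(size)] for _ in range(size)]
    for uvs, values in zip(uv_tris, corner_values):
        for y in range(size):
            for x in range(size):
                centre = ((x + 0.5) / size, (y + 0.5) / size)
                w = barycentric(centre, uvs[0], uvs[1], uvs[2])
                if w is None:
                    continue
                value = tuple(sum(w[k] * values[k][i] for k in range(3)) for i in range(dims))
                tex[y][x] = value + (1.0,)
    return tex


# Hypothetical single triangle whose positions are (2u, 2v, 5).
tex = bake([((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))],
           [((0.0, 0.0, 5.0), (2.0, 0.0, 5.0), (0.0, 2.0, 5.0))],
           4)
```

Texel (0, 0) ends up holding roughly (0.25, 0.25, 5.0, 1.0), while texels outside the triangle keep alpha 0, which is exactly what the `worldCoord[3] == 0.0` check in the script skips.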